Email categorization using local language models

Summary

This project explores using local large language models (LLMs) to categorize emails, so that I can simplify and have more control over my email management. The goals are threefold:

- compare the classification performance of many locally available LLMs

- learn about the applications of structured outputs and grammars

- build a lightweight classifier for scalable email organization while using the best performing LLM as a tool to generate training data

Using a hand-labeled dataset to measure classification accuracy, I found wide variation in how well different LLMs classify emails. Smaller models often performed worse than larger ones, but the gains from model size diminished, and accuracy plateaued at around 8B parameters. “Classical” machine learning classifiers like support vector classifiers (SVCs) perform well on this classification task and can be trained on labels generated by the best-performing LLMs. I walk through the dataset generation process, the use of LLMs for classification, and the quantification of performance.

Code for this project can be found on GitHub.

I didn’t use LLMs to write this post. However, I did use them to help write the project code and identify poorly explained concepts within this post.

Background

Emails. Every day I receive so many of them. Most are useless, some are important. The most annoying part is having to scroll through each one to determine which are important and worth keeping. It’s a draining procedure, so I decided to create this project to automate the task as much as reasonably possible. Ultimately, I want to automatically classify the general type of each email, so I can decide on its relative importance. I know there are people out there who are experts at setting up inbox rules, and the popular email providers may well already offer a service that does what I want.

However, given the recent hype around the capabilities of large language models (LLMs), I was curious to see how well they could accomplish this task. Since I don’t want AI companies to have access to the contents of my emails (ignore for now that I use Gmail), I will run a set of local LLMs and compare their performance against each other. I’m not going to stop there, though. I’m going to make the task slightly more complicated for the LLM by asking it to assign each email to a category (like “receipts” or “travel”), and then use the predictions from the “best performing” LLM to train a lightweight classifier that I can use to organize future emails. The plan is to:

- Download and organize my emails to create a dataset.

- Run an unsupervised analysis, develop a sense of the different types of emails I need to classify, create categories. (Become one with the emails)

- Create a supervised dataset to assess performance.

- Write a strong prompt to instruct the LLM to classify each email.

- Coerce the LLM to respond with one of the pre-defined categories without extra fluff.

- Compare LLMs, select the two best performers.

- Run on a larger set of emails to create a training dataset, train a lightweight classifier.

Downloading and organizing the emails

The first step in this project is to create a dataset that contains all of the emails still in my inbox. I want to include as much useful information as possible in the LLM’s prompt to help it classify the emails, like the subject, body, sender, and date. Long story short, I connected to the Gmail server, downloaded all the emails, formatted them into a list of dictionaries, and created a polars.DataFrame (see GitHub for code).

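```python
import polars as pl

def process_email(email) -> dict:
    # process email ... represent as dict
    return {
        'subject': ...,
        'from': ...,
        'date': ...,
        'attachments': ...,
        'body': ...,
    }

# `emails`: the raw messages downloaded from the Gmail server (see GitHub)
email_df = pl.DataFrame([process_email(email) for email in emails])  # polars DataFrame

# save DataFrame
email_df.write_parquet('/path/to/df.parquet')
```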

This DataFrame serves as the backbone for all subsequent steps. All analysis will create variations of this DataFrame.

Initial (unsupervised) analysis

Exploring the embedding space used in the final classification step.

Embeddings

I want to understand how the contents of each email relate to the others, and whether there are clear categories that can be extracted from the structure of the data. To do this, I need to represent each email numerically. There are multiple ways to do this, but the approach I’m going to take here is to use a language embedding model. Embedding models take a block of text and transform it into a multidimensional vector. The values in the vector jointly encode the semantic meaning of the text (often several meanings at once), and vectors that are close together in the embedding space share similar meanings (e.g., “child” is close to “kid” but far from “sun”). Embedding models are often trained to specialize in different sub-tasks, so it is unclear which model will produce the best embedding for this task. Therefore, I selected two embedding models, each of which embeds text into a 768-dimensional vector and has a maximum context window of ~8,000 tokens (large enough to encapsulate the majority of the emails):

- gte-base-en-v1.5

- snowflake-arctic-embed-m-v2.0

Any email longer than 8,000 tokens will be truncated when it is fed into the embedding model, which is not ideal. To reduce this likelihood, I can do a bit of email sanitization. Many emails are written with HTML and we can assume the HTML tags and styling don’t provide useful contextual information for categorization. Thus, the HTML markup can be removed using beautifulsoup4, a great Python package to remove markup and extract the relevant text. Sanitizing the emails reduces the token count by an order of magnitude (see the table below).

| Statistic | Token count before cleaning | Token count after cleaning |
|---|---|---|
| Mean | ~4,500 | ~450 |
| 99.5th percentile | ~52,000 | ~4,300 |

After cleaning, most emails have fewer than 5,000 tokens, so truncation is rare. And when an email is truncated, the retained portion still carries enough context to generate a useful embedding, because it is no longer saturated with markup noise.
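The post uses beautifulsoup4 for this step. To keep the sketch below dependency-free, it swaps in the standard library’s `html.parser`, which is cruder (for example, text split by inline tags gets an extra space); `html_to_text` is a hypothetical name. It keeps visible text, drops `<script>` and `<style>` contents, and collapses whitespace.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text nodes, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)  # decode entities like &amp; in text
        self._chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self._chunks.append(data.strip())

    def text(self) -> str:
        # join the text nodes and collapse leftover whitespace and newlines
        return " ".join(" ".join(self._chunks).split())


def html_to_text(html: str) -> str:
    """Strip HTML markup from an email body, keeping only the readable text."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


html = "<html><head><style>p{color:red}</style></head><body><p>Your order <b>#123</b> shipped.</p></body></html>"
print(html_to_text(html))  # Your order #123 shipped.
```

With beautifulsoup4 installed, `BeautifulSoup(html, "html.parser").get_text(separator=" ", strip=True)` does roughly the same job with more forgiving parsing.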

Each email is then embedded with both models using the SentenceTransformers Python package. I assigned pseudo-labels to the emails using agglomerative clustering on the embeddings, with the number of clusters set close to the number of pre-defined categories I would eventually create.
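A minimal sketch of the embedding and pseudo-labeling step, assuming the Hugging Face Hub IDs `Alibaba-NLP/gte-base-en-v1.5` and `Snowflake/snowflake-arctic-embed-m-v2.0` for the two models (both ship custom model code, hence `trust_remote_code=True`). The post doesn’t say which linkage it used; Ward linkage on unit-normalized vectors is my assumption, as are the helper names `embed` and `pseudo_label`. The default `n_clusters=11` mirrors the 11 categories defined later.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering


def embed(texts: list[str], model_name: str) -> np.ndarray:
    """Embed cleaned email texts with a SentenceTransformers model (hypothetical helper)."""
    # Imported lazily so the clustering helper below works without the package installed
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name, trust_remote_code=True)
    # Unit-length vectors make Euclidean distance a monotone function of cosine similarity
    return model.encode(texts, normalize_embeddings=True, show_progress_bar=True)


def pseudo_label(embeddings: np.ndarray, n_clusters: int = 11) -> np.ndarray:
    """Assign one pseudo-label per email with Ward-linkage agglomerative clustering."""
    return AgglomerativeClustering(n_clusters=n_clusters, linkage="ward").fit_predict(embeddings)


# Usage (requires sentence-transformers and a model download):
# X = embed(cleaned_bodies, "Snowflake/snowflake-arctic-embed-m-v2.0")
# labels = pseudo_label(X)
```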

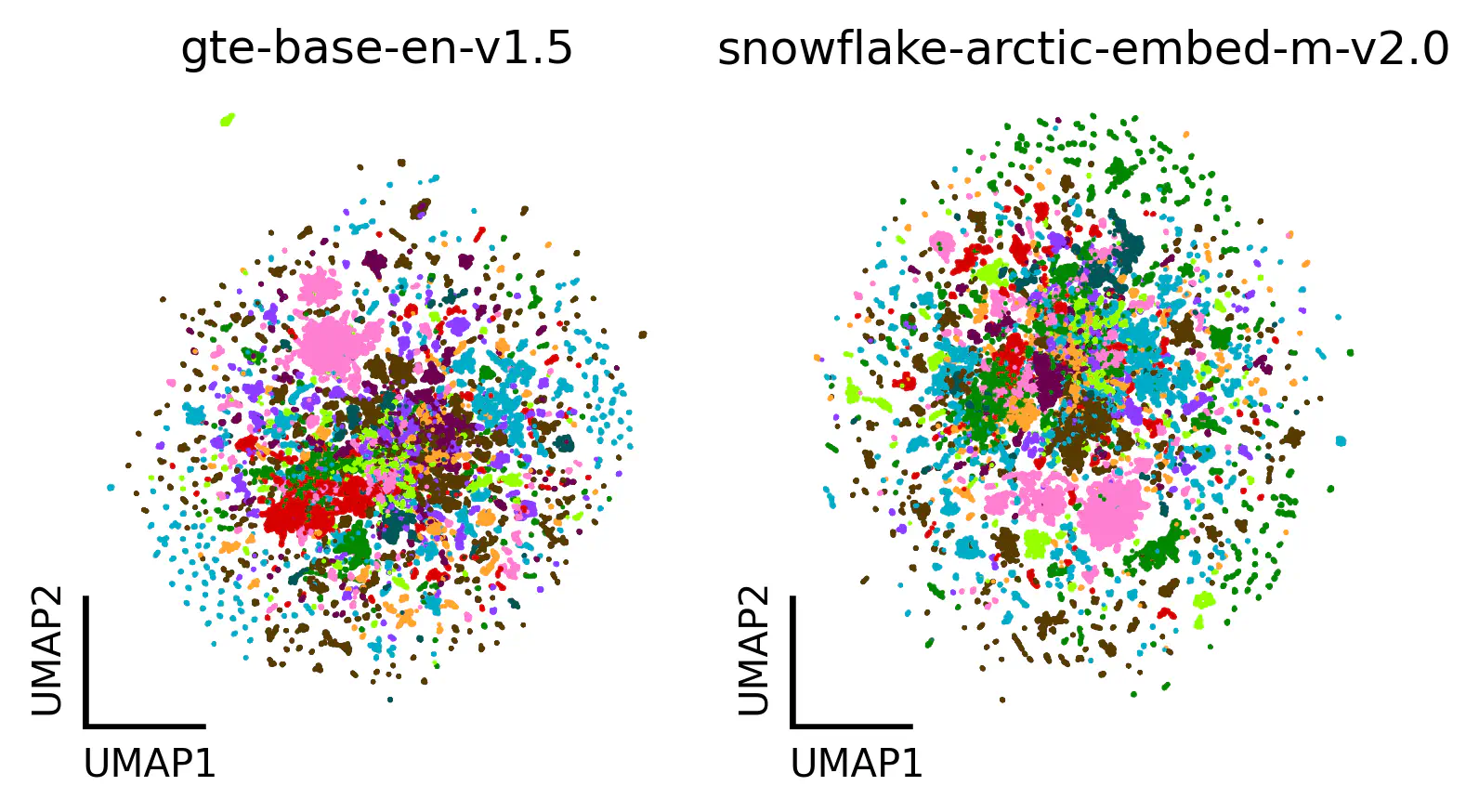

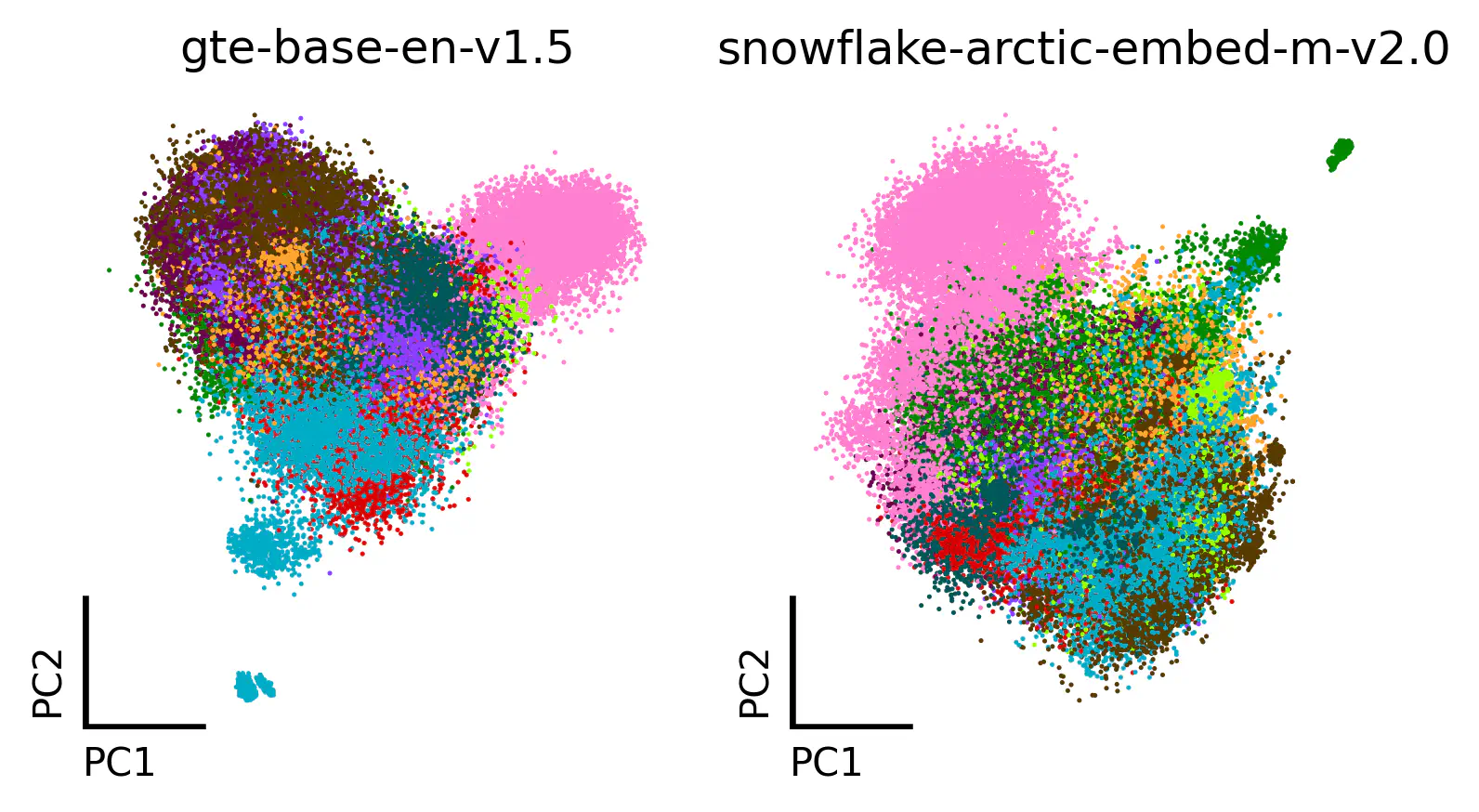

The gte-base-en-v1.5 embedding (left) and the snowflake-arctic-embed-m-v2.0 embedding (right). Each point is a different email. Color represents cluster label. Note: Cluster IDs were computed for each embedding separately, which makes it difficult to compare the clusters across embeddings. I attempted to match the clusters across embeddings so that the colors (i.e., cluster IDs) represented the same pseudo-label. See the match_clusters function for more information.
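The post’s `match_clusters` function lives in the GitHub code and isn’t shown here. One standard way to do this kind of matching, sketched below under that caveat, is to count how many emails each pair of cluster IDs shares and then solve the resulting linear assignment problem with SciPy’s `linear_sum_assignment`, so the total overlap is maximized. The name `match_cluster_ids` is hypothetical, and the sketch assumes both clusterings have the same number of clusters.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_cluster_ids(ref_labels, other_labels) -> np.ndarray:
    """Relabel `other_labels` so its cluster IDs line up with `ref_labels`."""
    ref_labels = np.asarray(ref_labels)
    other_labels = np.asarray(other_labels)
    ref_ids = np.unique(ref_labels)
    other_ids = np.unique(other_labels)

    # overlap[i, j] = number of emails in cluster other_ids[i] AND cluster ref_ids[j]
    overlap = np.array(
        [[np.sum((other_labels == o) & (ref_labels == r)) for r in ref_ids] for o in other_ids]
    )

    # One-to-one mapping of IDs that maximizes the total number of shared emails
    rows, cols = linear_sum_assignment(overlap, maximize=True)
    mapping = {other_ids[i]: ref_ids[j] for i, j in zip(rows, cols)}
    return np.array([mapping[label] for label in other_labels])


# The second clustering found the same groups but numbered them differently
print(match_cluster_ids([0, 0, 1, 1, 2, 2], [2, 2, 0, 0, 1, 1]))  # [0 0 1 1 2 2]
```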

Interpretation – PCA

PCA is a useful tool for looking at low-dimensional representations of data. Intuitively, it “rotates” the (centered) data so that the first dimension becomes the axis with the greatest variability in the original embedding space, and each subsequent dimension explains less variance than the one before. An important feature of PCA is that it is a linear operation: the full rotation preserves pairwise distances between emails exactly, while a projection onto the first two components keeps the directions of greatest spread but can only shrink distances, so emails that overlap in the plot may still be separated in the full space. Looking at the data through this lens helps us understand how separable the pseudo-labels are (and, indirectly, the pre-defined categories), and how simple the categorization task might be. Importantly, we are only looking at two dimensions of the embedding space, so we shouldn’t draw sweeping conclusions about the data from this representation alone.

We see a general separation of pseudo-labels, but also significant overlap. This overlap could be caused by an inadequate view of the embedding space. For example, it’s possible that if we added some more dimensions to the PCA visualization, we could see more separation. Switching up the visualization might help disambiguate. For that we’ll move on to the next section.
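The point that overlap in a 2-D PCA view doesn’t imply overlap in the full space can be checked numerically. The sketch below uses random 768-dimensional vectors as a stand-in for the email embeddings (an assumption, not the post’s data): keeping every principal component preserves all pairwise distances, while keeping only two can only shrink them.

```python
import numpy as np
from scipy.spatial.distance import pdist
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 768))  # stand-in for 200 email embeddings

pca = PCA().fit(X)  # keeps min(n_samples, n_features) = 200 components
X_rotated = pca.transform(X)
X_2d = X_rotated[:, :2]  # the 2-D view used in the plots

d_orig, d_full, d_2d = pdist(X), pdist(X_rotated), pdist(X_2d)
two_d_share = pca.explained_variance_ratio_[:2].sum()

print(np.allclose(d_orig, d_full))  # True: all components = centering + rotation
print(np.all(d_2d <= d_orig + 1e-9))  # True: an orthogonal projection never stretches distances
print(f"variance visible in 2-D: {two_d_share:.1%}")
```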

Interpretation – UMAP

UMAP is another tool, one that emphasizes local relationships rather than global ones. It non-linearly projects the data into a low-dimensional space while attempting to preserve any underlying manifolds that may exist within the data. Because it transforms the data non-linearly, global structure (such as the distances between far-apart groups) is harder to interpret. It is often used to identify clusters of similar datapoints (see RNA sequencing analysis for good examples of this), which is how I intend to use it.

The UMAP representation produces an interesting view of the embedding space. Once again, there is a general separation of pseudo-labels, where emails with the same label tend to cluster together. In this view, we can see many (>100) small “islands”, where a few emails cluster together, separate from the others. Islands exist for all pseudo-labels, indicating that the emails within an island have embeddings sufficiently different from those of other emails in the same cluster. Additionally, there is clear overlap across pseudo-labels in the center of the plots.

Interpretation – defining categories

I was hoping that visualizing the embeddings would reveal some sort of structure I could take advantage of for defining categories with unsupervised techniques. It looks like this could work for one or two categories, but not holistically. The many small islands that exist in the UMAP representation are a reasonable indication of this. The overlap between pseudo-labels indicates that defining categorical boundaries with unsupervised techniques would be tricky; how would I know if the boundaries make sense?

Defining categories via manual analysis

Given the difficulty in using the above unsupervised techniques to create email categories, I opted to manually define them and avoid over-engineering a solution to this problem. I went through a bunch of emails to get a reasonable set of categories that encapsulate the variability of possible emails that I observed throughout the years.

| Category | Description |
|---|---|
| Personal or professional correspondence | Emails with family, friends, colleagues. Have a colloquial tone. |
| Financial information | Bank statements, investment updates, bills, receipts, financial aid, scholarships, grants. |
| Medical information | Doctor’s appointments, prescriptions, medical test results, health insurance information. |
| Programming, educational, and technical information | Coding projects, GitHub notifications, educational resources, Google Scholar emails, technical documentation. |
| News alerts and newsletters | News updates, company newsletters, or subscriptions. |
| Travel, scheduling and calendar events | Flight confirmations, reservations, scheduling, calendar events. |
| Shopping and order confirmations | Online or food shopping, order confirmations, delivery updates. |
| Promotional emails | Marketing, sales, advertisements, promotional content. |
| Customer service and support | Customer service, support, or help desk content. |
| Account security and privacy | Security notifications, data protection alerts. |
| Other | Emails that don’t fit into the other categories. |

They span a large breadth of possible emails but aren’t all-inclusive; “Other” is a catch-all for outliers. This categorization is good enough for my needs, but not perfect. Had I aimed for perfection, I would never have finished this project. Ultimately, I ended up with 11 categories.

Creating a supervised dataset

Selecting the data for hand-labeling

Now that I have a set of categories, I can create a hand-labeled (i.e., supervised) dataset using a subset of the emails I downloaded. This is an important dataset, as it allows me to define the main axis for evaluating LLM performance – prediction accuracy. Therefore, I need to make sure I have a decent representation of each category within the dataset. One major concern is that some categories are disproportionately represented, like promotional emails. There are multiple potential solutions to this problem.

The simplest approach is a form of brute force; label random emails until the rarest category has accumulated some threshold of emails. However, this method is time intensive.

A smarter approach could be to sample emails evenly from the pseudo-labels computed in the embedding section. However, there could be an over-representation of certain emails within a category which would bias performance calculations (for example, an over-representation of Google calendar emails from a scheduling pseudo-label). Another issue is that a pseudo-label might not correspond to an individual category (or any category), and sampling from the label will give fewer examples of each true category contained within.

Another approach would be to use the LLMs to categorize a large number of emails. Once multiple LLMs have categorized the emails, you can sample emails from each category, and repeat for each LLM, so that you don’t accidentally bias the performance calculation later on. However, this is quite computationally expensive.
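The LLM-driven approach can be sketched as follows. It assumes each LLM’s predictions are available as a plain mapping from a (hypothetical) email ID to a predicted category; `sample_for_labeling` and its arguments are my own names. For every LLM it draws up to `per_category` emails from each predicted category and returns the union, so no single model’s mistakes decide which emails get hand-labeled.

```python
import random
from collections import defaultdict


def sample_for_labeling(
    predictions: dict[str, dict[str, str]], per_category: int, seed: int = 0
) -> set[str]:
    """Pick email IDs to hand-label, balanced by each LLM's predicted category.

    predictions maps LLM name -> {email_id: predicted_category}.
    """
    rng = random.Random(seed)
    selected: set[str] = set()
    for llm_name in sorted(predictions):  # sorted so a given seed is reproducible
        by_category: dict[str, list[str]] = defaultdict(list)
        for email_id, category in sorted(predictions[llm_name].items()):
            by_category[category].append(email_id)
        for category in sorted(by_category):
            ids = by_category[category]
            selected.update(rng.sample(ids, min(per_category, len(ids))))
    return selected


preds = {
    "granite": {"e1": "Promotional emails", "e2": "Promotional emails", "e3": "Medical information"},
    "tulu": {"e1": "Promotional emails", "e2": "Other", "e3": "Medical information"},
}
# Always contains "e2" and "e3", the only emails in their predicted categories
print(sorted(sample_for_labeling(preds, per_category=1)))
```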

In the end, I did a mixture of the first and last approach. After I selected a subset to hand-label, I needed to set up a streamlined labeling system.

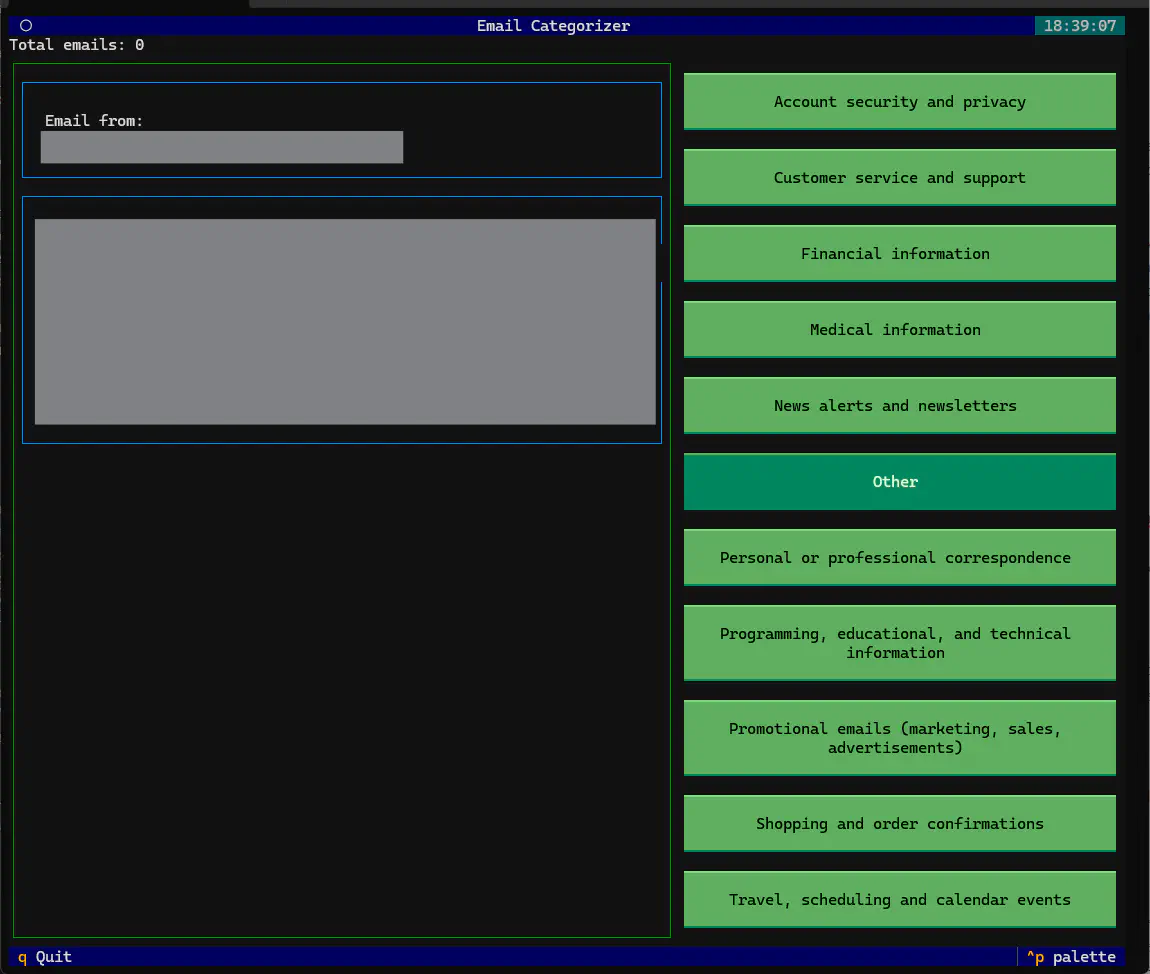

Building a labeling interface

A simple solution for hand-labeling the emails is to create a minimal user interface. I wanted to build something quick and dirty (I don’t really like building user interfaces) and used this opportunity to learn the Textual framework. This framework is cool because it runs in the terminal and doesn’t require OS-specific graphical libraries or complicated installation instructions. Plus, the interface didn’t need to be fancy, just buttons and text boxes.

The interface was quite straightforward. The email contents sat on the left side, and the right side listed a button for each category. Clicking a category button assigned that label to the displayed email and saved it to a new DataFrame. In total, I labeled ~900 emails and made sure I had at least a few samples from each category.

For many emails, I had trouble assigning just one category. I suspect that if I re-labeled the emails in this dataset multiple times, I would give a fraction of them different labels. The reality is that certain emails fit into multiple categories; for example, an email exchange with customer support to change my password could fit into either the customer service or the account security category.

Performing categorization

Prompt construction

To get the most out of using LLMs, prompts should be well-constructed. There are several techniques that have been shown to improve LLM responses. For example, crafting clear and precise instructions, providing relevant context, and specifying the desired format of the response have all been shown to improve performance (see Anthropic’s guide for more information). I have also read that repeating instructions at the beginning and end can sometimes improve instruction following. My prompt specifies that the goal is email categorization and enumerates the category options with a few examples for each. The system prompt was also adapted to make sure the model produced the correct format.

Here is the system prompt:

```text
You are an expert email categorizer and summarizer. You must categorize the following emails with a provided label. Emails are found within XML <email> tags. Respond with JSON.
```

And the user prompt template:

```text
Categorize the following email using one of the provided labels. If the email does not fit any of the provided labels, use the “Other” label. Provide an alternative label using one to three words of your choice. This alternative label must be a fitting yet general category for the email. Also, provide a suggestion for the email subject using only a single word.

Here are examples of the provided labels:
- Personal or professional correspondence: emails with family, friends, or colleagues. They have a more colloquial tone.
- Financial information: bank statements, investment updates, bills, receipts, financial aid, scholarships, or grants.
- Medical information: doctor’s appointments, prescriptions, medical test results, or health insurance information.
- Programming, educational, and technical information: coding projects, GitHub, educational resources, google scholar, or technical documentation.
- News alerts and newsletters: news updates, newsletters, or subscriptions.
- Travel, scheduling and calendar events: flight confirmations, reservations, scheduling, or calendar events.
- Shopping and order confirmations: online or food shopping, order confirmations, delivery updates.
- Promotional emails (marketing, sales, advertisements): promotional emails, marketing, sales, or advertisements.
- Customer service and support: customer service, support, or help desk.
- Account security and privacy: account security, privacy, or data protection.
- Other: emails that do not fit any of the provided labels.

Here is the cleaned email body:
<email>
From: {from_}
Date: {email_date}
Subject: {subject}
Body:
<body>
{email}
</body>
</email>

Again, categorize the following email using one of the provided labels. If the email does not fit any of the provided labels, use the “Other” label. Provide an alternative label using one to three words of your choice. This alternative label must be a fitting yet general category for the email. Also, provide a suggestion for the email subject using only a single word.
```

The curly brackets {} indicate places where email-specific information is inserted.
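Filling the template is plain string formatting. The sketch below assumes Python’s `str.format` and the dict keys produced by `process_email` earlier (`from`, `date`, `subject`, `body`); the instruction paragraphs and label list are abbreviated as `[...]` to save space, and `build_messages` is a hypothetical helper that returns chat messages in the common role/content shape.

```python
SYSTEM_PROMPT = (
    "You are an expert email categorizer and summarizer. You must categorize the following "
    "emails with a provided label. Emails are found within XML <email> tags. Respond with JSON."
)

# Abbreviated: the full instructions and label list from the template go where "[...]" is
USER_TEMPLATE = """[...]

Here is the cleaned email body:
<email>
From: {from_}
Date: {email_date}
Subject: {subject}
Body:
<body>
{email}
</body>
</email>

[...]"""


def build_messages(email: dict) -> list[dict[str, str]]:
    """Fill the template from a `process_email` dict and return chat-style messages."""
    user_prompt = USER_TEMPLATE.format(
        from_=email["from"],  # presumably `from_` because `from` is a Python keyword
        email_date=email["date"],
        subject=email["subject"],
        email=email["body"],
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]


msgs = build_messages({"from": "a@example.com", "date": "2024-01-01", "subject": "Hello", "body": "Hi there"})
print(msgs[1]["content"])
```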

Constraining responses using structured outputs

Note that the system prompt asks for responses in JSON format. This is because I’m taking advantage of a relatively recent technique that produces structured responses, or outputs, that are guaranteed to adhere to the structure of a pre-defined schema. In a nutshell, it’s a method that constrains the sampler to only sample from valid tokens, where the validity is defined by the schema. See here and llama.cpp’s documentation for more information. It is recommended to set the temperature parameter (used for sampling tokens) to 0 to reproducibly sample the most likely token.
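To make the idea concrete, here is a toy, string-level version of constrained greedy decoding (temperature 0) over a fixed label set. It is not how llama.cpp does it (its grammar-based sampling works on the model’s real tokenizer vocabulary with a grammar derived from the schema); the vocabulary, scores, and function name are made up for illustration. At each step, any token that would take the output off every allowed path is masked out, and the highest-scoring survivor wins. It also assumes no allowed label is a prefix of another.

```python
def constrained_greedy_decode(score_fn, vocab: list[str], allowed: list[str], max_steps: int = 50) -> str:
    """Greedy decoding that can only ever produce one of the strings in `allowed`."""
    out = ""
    for _ in range(max_steps):
        if out in allowed:
            return out
        # Mask: keep only tokens whose continuation is still a prefix of some allowed label
        valid = [tok for tok in vocab if any(a.startswith(out + tok) for a in allowed)]
        if not valid:
            raise ValueError(f"no valid continuation after {out!r}")
        scores = score_fn(out)  # token -> score; tokens missing from the dict score -inf
        out += max(valid, key=lambda tok: scores.get(tok, float("-inf")))
    raise ValueError("max_steps exceeded")


VOCAB = ["Fin", "ancial", " information", "Sh", "opping", "Other", "Hello", "!"]
LABELS = ["Financial information", "Other"]


def toy_scores(prefix: str) -> dict[str, float]:
    # Pretend model: unconstrained, it would happily start with "Hello"
    return {"Hello": 5.0, "!": 3.0, "Fin": 2.0, "Other": 1.0, "ancial": 1.0, " information": 1.0}


print(constrained_greedy_decode(toy_scores, VOCAB, LABELS))  # Financial information
```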

Ollama can produce structured outputs in Python, and pydantic is the recommended tool for generating the response schema:

```python
import enum
from typing import Annotated

from pydantic import BaseModel, Field

class Labels(enum.Enum):
    financial = "Financial information"
    ...  # enumeration of rest of pre-defined labels

class Prediction(BaseModel):
    # primary category
    class_label: Labels
    # 1-3 word alternative label
    alternative_label: Annotated[str, Field(pattern="^[a-z]+( [a-z]+){0,2}$")]
    # 1 word subject suggestion
    subject_suggestion: Annotated[str, Field(pattern="^[a-z]+$")]
```

The Prediction schema asks the LLM for three attributes: a class label (i.e., category), an alternative label, and a subject suggestion. This project only uses the class_label attribute, but I added the other two in case I want to use them in a future project. Because the latter two attributes have no pre-defined set of possible values, I had to define their output grammar manually, as regular-expression patterns.
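For completeness, here is a hedged sketch of how the schema might be used end to end. It assumes the `ollama` Python package (v0.4 or later), whose `chat` function accepts a JSON schema via `format` and sampling options via `options`; `ask_llm` is a hypothetical helper, not the post’s `categorize_email`. The enum values come from the category table, but the member names are my own, since the post elides the rest of its enum. Only the validation part runs without an Ollama server.

```python
import enum
from typing import Annotated

from pydantic import BaseModel, Field, ValidationError


class Labels(enum.Enum):
    # Values from the category table; member names are illustrative
    correspondence = "Personal or professional correspondence"
    financial = "Financial information"
    medical = "Medical information"
    technical = "Programming, educational, and technical information"
    news = "News alerts and newsletters"
    travel = "Travel, scheduling and calendar events"
    shopping = "Shopping and order confirmations"
    promotional = "Promotional emails"
    support = "Customer service and support"
    security = "Account security and privacy"
    other = "Other"


class Prediction(BaseModel):
    class_label: Labels
    alternative_label: Annotated[str, Field(pattern="^[a-z]+( [a-z]+){0,2}$")]
    subject_suggestion: Annotated[str, Field(pattern="^[a-z]+$")]


def ask_llm(messages: list[dict[str, str]], model: str) -> Prediction:
    """Request a schema-constrained reply from a local Ollama server (hypothetical helper)."""
    from ollama import chat  # needs the `ollama` package and a running Ollama server

    response = chat(
        model=model,
        messages=messages,
        format=Prediction.model_json_schema(),  # constrains sampling to this schema
        options={"temperature": 0},  # greedy, reproducible sampling
    )
    return Prediction.model_validate_json(response.message.content)


# Validation works offline: a well-formed reply parses, a malformed one is rejected
raw = '{"class_label": "Financial information", "alternative_label": "bank statement", "subject_suggestion": "statement"}'
print(Prediction.model_validate_json(raw).class_label)  # Labels.financial
try:
    Prediction.model_validate_json(raw.replace("bank statement", "Bank Statement!"))
except ValidationError as err:
    print("rejected:", err.error_count(), "error")  # rejected: 1 error
```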

Running the LLMs

This step took the longest because I ran the LLMs on my local machine. Some of the LLMs were much larger than the amount of VRAM available and had to be dynamically allocated between the GPU and CPU; this is a major bottleneck that slowed the larger models (>12B parameters) immensely.

The process consists of running each LLM on the set of hand-labeled emails, saving a DataFrame with the predicted labels, and moving on to the next LLM. The following pseudocode gives a rough idea:

```python
from functools import partial
from pathlib import Path

import polars as pl

MODELS = {}  # maps output folder -> LLM name; each LLM's output is saved in its own folder

def categorize_email(email: dict, llm_name: str) -> dict:
    """Add the email to the prompt, send it to the LLM for a structured-output
    response, and return a dict of the email contents plus the label prediction."""
    ...

email_df = pl.read_parquet('/path/to/df.parquet')  # polars DataFrame of emails
...  # sort email_df so that the first rows are the hand-labeled emails

# partition the DataFrame into chunks the length of the hand-labeled set
# (`hand_labeled_emails` is defined elsewhere)
for i, partition in enumerate(email_df.iter_slices(n_rows=len(hand_labeled_emails))):
    for folder, model_name in MODELS.items():
        output_path = Path(f'/path/to/{folder}/df_{i}.parquet')
        # skip chunks that have already been categorized
        if output_path.exists():
            continue
        cat_fun = partial(categorize_email, llm_name=model_name)
        # run the function on each email entry (rows as dicts)
        response_df = pl.DataFrame([cat_fun(row) for row in partition.iter_rows(named=True)])
        response_df.write_parquet(output_path)
```

LLM performance comparison

Now that the LLM predictions have been generated, we can compare their performance and ultimately choose a pair of LLMs that meet the standards of this project. The following metrics meet my needs:

- Inference speed: how quickly an email can be categorized

- Accuracy: the fraction of labels the model predicts correctly for each category

- F1 score: the harmonic mean of precision and recall. Often highly correlated with accuracy

- Consistency / response entropy: the ability to provide a consistent prediction across multiple runs on the same email

I want something that can run quickly (fast inference), accurately categorize an email (high accuracy/F1 score), and is relatively confident in its prediction (high consistency/low entropy).

Inference speed

The first metric is inference speed, measured by the time it takes to receive a full response from the LLM. I want the LLM to be fast because I’m running the LLMs locally. Response times are measured for every email and the median response time for each LLM tested is plotted below.
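Timing a blocking call per email and taking the median is straightforward; below is a minimal sketch with hypothetical names (`median_latency`, `call`). The median is a reasonable choice here, since it isn’t thrown off by the occasional slow request, such as the first one after a model is loaded into memory.

```python
import statistics
import time
from typing import Callable, Iterable


def median_latency(call: Callable[[dict], object], emails: Iterable[dict]) -> float:
    """Median wall-clock seconds for `call` to return, timing each email separately."""
    durations = []
    for email in emails:
        start = time.perf_counter()
        call(email)  # e.g., one full, non-streamed LLM response
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


# Example with a stand-in for the LLM call
print(f"{median_latency(lambda email: time.sleep(0.01), [{}] * 5):.3f} s")
```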

Interestingly, some of the smaller models take longer to respond than expected (for example, Granite-3.1-MOE-1b), while some larger models are faster than expected (like Tulu-3). The slower-than-expected speed might be caused by llama.cpp (Ollama’s LLM backend) being under-optimized for certain architectures, such as Granite-3.1-MOE-1b’s mixture-of-experts design. The larger models are slow because I don’t have enough VRAM on my GPU to hold the model without it spilling into normal RAM.

Accuracy

The most basic of classification metrics. This metric gives us a sense of how often the LLM classifies an email with the same label I gave.

According to the plot above, larger LLMs tend to be more accurate than smaller ones, which makes sense given that parameter count is often correlated with capability. Interestingly, the DeepSeek models seem quite poor at email categorization. I go into more detail below, but I think it has to do with how these models are trained/distilled.

F1 score

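The F1 score is closely related to (and correlated with) accuracy, but it is more sensitive to classification errors: as the harmonic mean of precision and recall, it is pulled down by false positives and false negatives alike. Read this for more information on the F1 score.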
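A small worked example with scikit-learn. The post doesn’t say how F1 was averaged across categories; macro averaging (the unweighted mean of per-category F1 scores) is my assumption here, and the six labels are made up.

```python
from sklearn.metrics import accuracy_score, classification_report, f1_score

y_true = ["Promotional", "Promotional", "Shopping", "Shopping", "Financial", "Financial"]
y_pred = ["Promotional", "Shopping", "Shopping", "Shopping", "Financial", "Promotional"]

# Per category: Promotional P=1/2, R=1/2 -> F1=0.50
#               Shopping    P=2/3, R=1   -> F1=0.80
#               Financial   P=1,   R=1/2 -> F1=0.67
acc = accuracy_score(y_true, y_pred)  # 4 of 6 correct
macro_f1 = f1_score(y_true, y_pred, average="macro")  # unweighted mean of per-category F1

print(f"accuracy={acc:.3f}  macro F1={macro_f1:.3f}")  # accuracy=0.667  macro F1=0.656
print(classification_report(y_true, y_pred, zero_division=0))
```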

As expected there is a correlation with accuracy. Focusing on the differences, there seems to be a saturation in the F1 score at around 8B parameters. This suggests that we’re not going to gain much using an LLM larger than ~8B parameters to build the classifier training dataset.

Consistency, or response entropy

To compute this metric, each LLM is asked to categorize the same email multiple times, and the entropy of the resulting distribution of labels measures its consistency, or how confident the LLM is in its prediction. A high entropy means that the LLM categorized the email with multiple labels and no label is clearly favored; in other words, an inconsistent response. This metric requires the temperature used in the token sampling step to be set above 0 (LLM companies describe increasing the temperature as giving the LLM “more creativity”). For this experiment, I set it pretty high, to 0.7.
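A minimal sketch, assuming Shannon entropy in bits over the labels returned for a single email; the post doesn’t state the log base or how per-email entropies are aggregated per model (averaging them is one option). `response_entropy` is a hypothetical name.

```python
import math
from collections import Counter


def response_entropy(labels: list[str]) -> float:
    """Shannon entropy (bits) of the labels an LLM gave one email across repeated runs.

    0.0 means every run returned the same label; higher means less consistent.
    With 11 categories the maximum is log2(11), about 3.46 bits.
    """
    counts = Counter(labels)
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(response_entropy(["Other"] * 10))  # 0.0
print(response_entropy(["Financial information"] * 5 + ["Shopping and order confirmations"] * 5))  # 1.0
```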

Note that I did not compute this metric for the largest of the LLMs because I didn’t have the time to run them (Llama-3.3 would have taken days to finish).

The scatter plot above shows a general trend where larger LLMs tend to be more consistent in their responses. This suggests that the likelihood of the predicted category increases relative to the other categories as a function of LLM size. More generally, it could indicate that the larger an LLM is, the greater its capability to form arbitrary decision boundaries based on an input prompt.

Combining metrics to choose the best performing LLMs

We need to choose a couple of the “best performing” LLMs. As mentioned above, we want LLMs that meet all four of our criteria. First, let’s visualize the criteria in one plot to understand which LLMs might be the best to choose (I’m leaving out accuracy, since it’s correlated with the F1 score and provides less information).

This plot indicates that the fastest, most reliable, and most accurate models are probably the Granite or Tulu models. Let’s make it a bit more quantitative. By taking a weighted average of all the metrics including accuracy, we can take a slightly more data-driven approach. First, the metrics are min-max normalized so that the scale of each metric does not affect model ranking. Next, the F1 score and accuracy are inverted so that the best values are the lowest, and can be combined with the other two metrics. Note that there is some subjectivity here when setting the weights for determining metric importance.

```python
import polars as pl

df = pl.read_parquet('...')  # load in metrics with polars

# normalize scale to 0-1
def minmax(col: pl.Expr) -> pl.Expr:
    return (col - col.min()) / (col.max() - col.min())

# min-max normalize; flip f1 and accuracy so that lower is better for every metric
df_ranking = df.with_columns(
    minmax(pl.col("Inference duration (s)")),
    minmax(pl.col("entropy")),
    (1 - minmax(pl.col("f1"))).alias("f1"),
    (1 - minmax(pl.col("accuracy"))).alias("accuracy"),
)

# weighted average (the weights sum to 1)
df_ranking = df_ranking.with_columns(
    (
        pl.col("f1") * 0.35  # most important
        + pl.col("Inference duration (s)") * 0.3  # pretty important
        + pl.col("entropy") * 0.2  # important
        + pl.col("accuracy") * 0.15  # high corr. w/ f1, so less important
    ).alias("Weighted avg.")
)

df_ranking.select(["Model", "Weighted avg."]).sort("Weighted avg.")
```

The top 5 models are shown below.

| Model | Weighted avg. (lower is better) |
|---|---|
| Granite-3.1-8b | 0.170267 |
| Tulu-3 | 0.186966 |
| Granite-3.1-2b | 0.193733 |
| Llama-3.1-8b | 0.24509 |
| Qwen-2.5-7b | 0.245099 |

There we have it. Our contenders for creating a training dataset for the classifier are Granite-3.1-8b and Tulu-3!

N.B.: After I updated the comparison to include more models, the Granite-3.2 models were the clear winners. However, I didn’t want to re-run the classifier evaluations, so I’m sticking with 3.1 for the rest of this post.

Evaluating simple classifiers

Time for the final step – training an email category classifier on the text embeddings from the embeddings section. The goal is to build the best classifier we can using as simple a classification model as possible. I’ll:

- try a few different classifiers

- search over hyperparameters for each classifier type

- select the best performing hyperparameter set for each classifier type

- compare performance between classifiers

- compare performance between LLM labels (Granite-3.1-8b vs Tulu-3)

- compare performance between the two embeddings (snowflake vs GTE)

I’ve selected a set of classifiers to train, ordered by simplicity:

| Classifier | Description |
|---|---|
| Logistic regression | Simple but often requires tuning; very sensitive to data scale. |
| Support vector classification (SVC) | Simple, but kernel functions add a level of complexity. Class probabilities are estimates, not exact. |
| (Gaussian) Naive Bayes | Good for document classification using text-based representations like bag-of-words or other counts of discrete data. Here the features are continuous embeddings, so each feature is modeled with a Gaussian likelihood. |
| K-nearest neighbors | Stores the entire training set, so it uses a lot of memory, and every test point must be compared to all training points. |
| Random forest | Longest to train and often requires many decision trees to work well. However, it is quite robust to data scale, nonlinearities, and noise. |

After performing a non-exhaustive grid search, I selected the best performing hyperparameter set for each classifier type. Below I compare the results for all classifiers trained on labels from either the Tulu-3 or Granite-3.1-8b LLMs.

The snowflake embedding was used for this analysis. A subset of the generated labels was selected to provide equal numbers of each category. Classifiers are ordered by increasing average performance. Violin plots represent the held-out F1 scores across 4-fold stratified cross-validation, repeated 10 times.

SVC seems to outperform the other classification methods and generalizes well to held-out data. This is satisfying to see, as SVC is a relatively simple classifier. Additionally, classifiers trained on the labels generated by the Granite model consistently outperform those trained on the Tulu labels. Therefore, we’ll use the labels generated by Granite to train the final SVC model.
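The evaluation protocol in the caption (4-fold stratified cross-validation, repeated 10 times) maps directly onto scikit-learn. The sketch below assumes scikit-learn, uses synthetic data in place of the embeddings and Granite labels, and searches a small illustrative grid; the post’s actual grid isn’t given, and `f1_macro` scoring is an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, RepeatedStratifiedKFold, cross_val_score
from sklearn.svm import SVC

# Stand-in data: in the post, X would be snowflake embeddings and y the Granite labels
X, y = make_classification(n_samples=240, n_features=32, n_informative=12, n_classes=4, random_state=0)

# 4-fold stratified CV, repeated 10 times -> 40 held-out scores per configuration
cv = RepeatedStratifiedKFold(n_splits=4, n_repeats=10, random_state=0)

search = GridSearchCV(
    SVC(),
    param_grid={"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]},
    scoring="f1_macro",
    cv=cv,
)
search.fit(X, y)

# Per-fold held-out F1 for the best configuration (the values a violin plot would show)
fold_scores = cross_val_score(search.best_estimator_, X, y, scoring="f1_macro", cv=cv)
print(search.best_params_, fold_scores.mean().round(3), len(fold_scores))
```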

Held-out F1 scores for the GTE (purple) and snowflake (red) embedding models. SVC was used for this analysis. A subset of the generated labels was selected to provide equal numbers of each category. Violin plots and points represent the held-out F1 scores across 4-fold stratified cross-validation, repeated 10 times.

Interestingly, the snowflake embedding is more amenable to this classification task. Notably, the company that trained the snowflake embedding model optimized it for retrieval-augmented generation (RAG): the embeddings are trained to be distinct enough for accurate document retrieval, yet similar enough that semantically similar texts cluster together.

The final test is to see how well SVC performs on a validation dataset consisting of all the emails not used during training.

- F1 score: 0.79

- Accuracy: 0.84

It looks like the model generalizes well, and is ready to use “in production”!

Classification confidence

The SVC model also outputs an approximate likelihood (a probability estimate) that an email belongs to each category. Here are two example emails showing the likelihoods of the top four categories.

Example 1:

From: “Amazon.com” <XXX>

Subject: Your Amazon.com order #XXX-XXXXXXX-XXXXXXX

| Likelihood | Category |
|---|---|
| 100% | Shopping and order confirmations |
| 0% | Travel, scheduling and calendar events |
| 0% | Customer service and support |
| 0% | Financial information |

Example 2:

From: Caitlin <XXX>

Subject: lab inspection tomorrow

| Likelihood | Category |
|---|---|
| 29% | Programming, educational, and technical information |
| 21% | Personal or professional correspondence |
| 13% | Account security and privacy |
| 13% | Other |

The model is very confident that the Amazon email is an order confirmation, while less confident that the lab inspection email belongs in the technical information category. This information is very useful, because I can use it to define a confidence threshold where the model then defers to me for the final say. For instance, I would actually classify the second example as “personal or professional correspondence”. I can take this a step further, and add any email the model defers to me to the training dataset and subsequently improve the classifier.
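The deferral rule could look something like the sketch below. It assumes a scikit-learn-style classifier, where `SVC(probability=True)` exposes `predict_proba` (fit via Platt scaling with internal cross-validation, which is why the probabilities are estimates) and the probability columns follow `clf.classes_`. The function name `top_k_or_defer` and the 0.5 threshold are hypothetical.

```python
import numpy as np


def top_k_or_defer(proba: np.ndarray, classes: list[str], k: int = 4, threshold: float = 0.5):
    """Return the top-k (category, probability) pairs and whether to defer to a human.

    proba: one row of class probabilities, e.g. clf.predict_proba(x)[0].
    threshold: hypothetical cut-off; below it the email is flagged for manual review.
    """
    order = np.argsort(proba)[::-1][:k]  # indices of the k most likely categories
    ranked = [(classes[i], float(proba[i])) for i in order]
    defer = ranked[0][1] < threshold
    return ranked, defer


ranked, defer = top_k_or_defer(
    np.array([0.03, 0.92, 0.05]),
    ["Other", "Shopping and order confirmations", "Financial information"],
    k=2,
)
print(ranked, defer)  # confident, so defer is False
```

Emails flagged with `defer=True` are the ones I would label by hand and, as described above, feed back into the training set.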

Final thoughts

Overall, I believe that in the right settings, LLMs can be a powerful tool for lowering the barrier to entry for many tedious tasks, like creating a training dataset for email classification. It was interesting to observe such striking variation in capabilities across LLMs with similar parameter counts. To me, it suggests that there is still a ways to go in improving LLM capabilities, both in architecture and in training recipes.

Timing

LLMs can be a highly valuable resource for generating training data for simpler models. Without them, it would have taken me much longer to hand-label enough emails to build robust classifiers. Alongside human-in-the-loop model training, I can foresee LLM-in-the-loop training becoming a useful time-saving tool in the future. This project still took a while to finish, but less time than it would have taken otherwise.

DeepSeek model performance

Initially, I was surprised that the distilled DeepSeek models performed so poorly in this task. After thinking about it, I reasoned that these models were optimized to <think>: by forcing them to respond immediately (via structured outputs), I was skipping the step they were trained to perform first. This likely leads to suboptimal token selection. Reasoning LLMs aren’t meant for classification and structured output generation. One modification I could make to my pipeline is to let these models <think> first, and then add their reasoning to my prompt for structured output generation. However, this defeats the purpose of finding a model that is fast and accurate enough to generate a training dataset.
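The two-stage modification could look roughly like this. It is a sketch, not something the project implemented: it assumes the first, unconstrained call returns its reasoning wrapped in `<think>...</think>` tags, as the raw output of the distilled DeepSeek-R1 models does, and the helper names and prompt wording are hypothetical. The second prompt would then be sent with structured outputs enabled.

```python
import re

# Matches the reasoning block; DOTALL lets "." span multiple lines of reasoning.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_response: str) -> tuple[str, str]:
    """Hypothetical helper: separate the <think> block from the text that follows it."""
    match = THINK_RE.search(raw_response)
    if match is None:
        return "", raw_response.strip()
    return match.group(1).strip(), raw_response[match.end():].strip()


def second_pass_prompt(email_text: str, reasoning: str, categories: list[str]) -> str:
    """Hypothetical prompt for the constrained (structured-output) second call."""
    return (
        "Categorize the email into exactly one of: " + ", ".join(categories) + ".\n\n"
        f"Email:\n{email_text}\n\n"
        f"Your earlier reasoning:\n{reasoning}\n\n"
        "Respond only with the requested JSON object."
    )


raw = '<think>It mentions an order number.</think>\n{"category": "Shopping"}'
reasoning, answer = split_reasoning(raw)
print(second_pass_prompt("Your order has shipped.", reasoning, ["Shopping", "Other"]))
```

The cost is a second, slower generation per email, which is exactly the trade-off that makes this approach unattractive for bulk labeling.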

When are LLMs useful?

It’s my belief that LLMs should not replace other effective and efficient technologies. An LLM might perform a task with similar effectiveness, but it is often much less efficient in both energy consumption and time. Because LLMs are still in their infancy, we are still learning what they are capable of. In the process, we’re experimenting with replacing well-established technologies with LLMs (see for example: this GitHub repo) at a major cost in energy and efficiency. In the future, I want to see LLMs or their successors incorporate “classical”, proven methods, like classification, into their own pipelines.

Privacy

As enterprise language models become more powerful, so do the companies behind them. It’s difficult to trust a company, as its values often don’t align with those of individual people. Eventually, with enough data, a company could figure out how to subtly manipulate you through its chat services. The more you chat with its chatbot, the more information it has about what you think about, how you think, and what you know or don’t know. The LLM could then guide your thinking, omit certain information, or even provide false evidence to align your thoughts with the company’s. Personally, I find myself asking LLMs questions that I would normally type into Google, because it’s faster and the LLM’s response is easier to read. This is a dangerous road, and relying on local LLMs rather than enterprise ones partially mitigates the risk. Local models give you more control over how the LLM is used (regardless of the implicit goals or biases that might exist within the local LLM).

Future work

This post only covered training and evaluating the lightweight email categorization classifier. The next steps are to actually use it, and to implement a simple system that retrains the classifier on the emails it was not confident in predicting.
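A retraining step could be as simple as refitting on the original training set plus the human-reviewed emails, since scikit-learn's `SVC` has no `partial_fit` for incremental updates. The sketch below is a minimal version under loud assumptions: the post does not describe its feature extraction, so a TF-IDF pipeline stands in for it, and the `retrain` helper and toy emails are hypothetical.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC


def retrain(train_texts, train_labels, reviewed):
    """Hypothetical helper: refit from scratch on original data plus reviewed (text, label) pairs."""
    texts = list(train_texts) + [text for text, _ in reviewed]
    labels = list(train_labels) + [label for _, label in reviewed]
    # Assumed features: TF-IDF over the raw text (the project's features may differ).
    model = make_pipeline(TfidfVectorizer(), SVC(kernel="linear"))
    return model.fit(texts, labels)


train_texts = [
    "your order has shipped",
    "order confirmation and receipt",
    "flight itinerary for your trip",
    "hotel booking and flight details",
]
train_labels = ["Shopping", "Shopping", "Travel", "Travel"]

# Emails the classifier deferred on, now labeled by hand.
reviewed = [("lab inspection tomorrow, please tidy your benches", "Correspondence")]

model = retrain(train_texts, train_labels, reviewed)
print(sorted(model.classes_))
```

Refitting from scratch is cheap at personal-inbox scale; with a much larger dataset, an incrementally trainable model such as `SGDClassifier` might be worth considering instead.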

Caveats

One significant caveat is that, while hand-labeling emails, I had trouble accurately assigning labels to certain messages. This introduces uncertainty in the upper bound on the performance of the LLMs tested in this project.

Bias

I acknowledge that my email labeling is subjective and biased, and that this likely influenced the outcome of this project. I can live with this because it (hopefully) doesn’t affect anyone but me.

Quantization

The LLMs were used at different degrees of quantization because I have limited VRAM on my GPU. The smallest models were quantized to q8_0, whereas the largest were quantized to q3_K_M. Quantization is known to affect LLM performance, so it should be taken into account when interpreting these results. Where the Ollama tag specifies it, the degree of quantization appears as part of the model name in the Appendix below; tags without an explicit quantization use Ollama’s default for that model.
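A back-of-the-envelope calculation shows why the largest models needed aggressive quantization: weight memory is roughly the parameter count times bits per weight, divided by 8. The bits-per-weight figures below are assumptions: q8_0 follows from its format (blocks of 32 int8 weights plus one fp16 scale, i.e. 8.5 bits per weight), while the K-quant values are rough averages that vary by model. The estimate ignores the KV cache and activations, which also need memory, especially with a 13,000-token context.

```python
# Approximate bits per weight for GGUF quantization types (K-quant values are rough).
BITS_PER_WEIGHT = {
    "q8_0": 8.5,    # 32 x 8-bit weights + one 16-bit scale per block
    "q4_K_M": 4.8,  # approximate average
    "q3_K_M": 3.9,  # approximate average
}


def weight_memory_gb(params_billion: float, quant: str) -> float:
    """Estimate memory for the model weights alone, in decimal gigabytes."""
    return params_billion * 1e9 * BITS_PER_WEIGHT[quant] / 8 / 1e9


# Parameter counts and quantization types taken from the Appendix table.
for name, params, quant in [("Llama-3.2-3b", 3.21, "q8_0"), ("Llama-3.3", 70.6, "q3_K_M")]:
    print(f"{name}: ~{weight_memory_gb(params, quant):.1f} GB")
# Llama-3.2-3b: ~3.4 GB
# Llama-3.3: ~34.4 GB
```

Even at q3_K_M, the 70B model’s weights alone are well beyond the GPU’s 12 GB of VRAM, so it cannot run entirely on the GPU.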

Inadequate prompt engineering

I suspect that including positive and negative examples for each category in the prompts could improve the LLMs’ categorization performance. Other prompting techniques may have helped as well.
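One way to add positive and negative examples is to list, for each category, a few emails that belong to it and a few that look similar but do not. The sketch below is hypothetical: the prompt layout, the `build_category_prompt` helper, and the example subjects are illustrative assumptions, not the prompt used in this project.

```python
def build_category_prompt(email_text: str, categories: dict) -> str:
    """Hypothetical few-shot prompt with positive and negative examples per category.

    `categories` maps a category name to a dict with "description",
    "positive" (list of examples), and "negative" (list of examples).
    """
    lines = ["Assign the email to exactly one of the categories below.", ""]
    for name, info in categories.items():
        lines.append(f"Category: {name}")
        lines.append(f"  Description: {info['description']}")
        for example in info["positive"]:
            lines.append(f"  Belongs here: {example}")
        for example in info["negative"]:
            lines.append(f"  Does NOT belong here: {example}")
        lines.append("")
    lines.append(f"Email:\n{email_text}")
    return "\n".join(lines)


# Illustrative examples only.
example_categories = {
    "Shopping and order confirmations": {
        "description": "Receipts, order confirmations, and shipping updates.",
        "positive": ["Subject: Your order has shipped"],
        "negative": ["Subject: New deals picked for you this week"],
    },
}

print(build_category_prompt("Subject: lab inspection tomorrow", example_categories))
```

Negative examples are most useful for near-miss cases, such as marketing emails from a store versus actual order confirmations.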

Appendix

Model table: how the model names used in this post map to the models pulled from Ollama, along with their parameter counts.

| Model name | Name on Ollama | Param. count (B) |
|---|---|---|
| Command-R-7b | command-r7b:latest | 8.03 |
| DeepSeek-r1-distill-1.5b | deepseek-r1:1.5b | 1.78 |
| DeepSeek-r1-distill-7b | deepseek-r1:7b | 7.62 |
| DeepSeek-r1-distill-8b | deepseek-r1:8b | 8.03 |
| Dolphin-3 | dolphin3:latest | 8.03 |
| Falcon-3-3b | falcon3:3b | 3.23 |
| Falcon-3-7b | falcon3:latest | 7.46 |
| Gemma-2 | gemma2:latest | 9.24 |
| Gemma-3-1b | gemma3:1b | 1.0 |
| Gemma-3-4b | gemma3:4b | 4.3 |
| Gemma-3-12b | gemma3:12b | 12.2 |
| Gemma-3-27b | gemma3:27b | 27.4 |
| Granite-3.1-2b | granite3.1-dense:2b | 2.53 |
| Granite-3.1-8b | granite3.1-dense:latest | 8.17 |
| Granite-3.1-MOE-1b | granite3.1-moe:1b | 1.33 |
| Granite-3.1-MOE-3b | granite3.1-moe:3b | 3.3 |
| Granite-3.2-2b | granite3.2:2b-instruct-q8_0 | 2.53 |
| Granite-3.2-8b | granite3.2:8b | 8.17 |
| InternLM-3 | lly/InternLM3-8B-Instruct:8b-instruct-q4_k_m | 8.8 |
| Llama-3.1-8b | llama3.1:8b-instruct-q4_K_M | 8.03 |
| Llama-3.2-1b | llama3.2:1b | 1.24 |
| Llama-3.2-3b | llama3.2:3b-instruct-q8_0 | 3.21 |
| Llama-3.3 | llama3.3:70b-instruct-q3_K_M | 70.6 |
| Marco-o1 | marco-o1:latest | 7.62 |
| Mistral-7b | mistral:7b | 7.25 |
| Mistral-small-3 | mistral-small:latest | 23.6 |
| Nemo | mistral-nemo:12b-instruct-2407-q4_K_M | 12.2 |
| Olmo-2 | olmo2:7b | 7.3 |
| Phi-4 | phi4:latest | 14.7 |
| Phi-4-mini | phi4-mini:3.8b-q8_0 | 3.84 |
| Qwen-2.5-0.5b | qwen2.5:0.5b-instruct-q8_0 | 0.494 |
| Qwen-2.5-1.5b | qwen2.5:1.5b-instruct-q8_0 | 1.54 |
| Qwen-2.5-3b | qwen2.5:3b | 3.09 |
| Qwen-2.5-7b | qwen2.5:7b | 7.62 |
| Qwen-2.5-32b | qwen2.5:32b-instruct-q3_K_M | 32.8 |
| QwQ | qwq:latest | 32.8 |
| Smallthinker | smallthinker:latest | 3.4 |
| SmolLM-2-360m | smollm2:360m | 0.362 |
| SmolLM-2-1.7b | smollm2:latest | 1.71 |
| Tulu-3 | tulu3:latest | 8.03 |
| Tulu-3.1 | hf.co/bartowski/allenai_Llama-3.1-Tulu-3.1-8B-GGUF:Q6_K | 8.03 |

Shared Ollama parameters for all LLM-based text generation:

| Parameter | Value |
|---|---|
| Context size | 13,000 tokens |
| Temperature (all runs except the consistency analysis) | 0 |
| Temperature (consistency analysis only) | 0.75 |
| JSON schema for structured output | GitHub link |
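For reference, these settings map onto a request to Ollama’s `/api/chat` endpoint: `num_ctx` and `temperature` go in `options`, and recent Ollama versions accept a JSON schema in the `format` field to constrain the output. The sketch below assumes Ollama is listening on its default local port; the schema is a placeholder with two example categories, not the project’s actual schema (linked above), and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

# Placeholder schema for illustration; the project's real schema is on GitHub.
EXAMPLE_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {
            "type": "string",
            "enum": ["Shopping and order confirmations", "Other"],
        }
    },
    "required": ["category"],
}


def build_chat_request(model: str, prompt: str, temperature: float = 0.0) -> dict:
    """Hypothetical helper: build a non-streaming /api/chat request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": EXAMPLE_SCHEMA,  # constrain the reply to this JSON schema
        "stream": False,
        "options": {"num_ctx": 13000, "temperature": temperature},
    }


def send(payload: dict) -> dict:
    """POST the request and parse the model's JSON reply (requires a running Ollama server)."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read())
    return json.loads(body["message"]["content"])


payload = build_chat_request("llama3.1:8b-instruct-q4_K_M", "Categorize this email: ...")
print(json.dumps(payload["options"]))
```

For the consistency analysis, the same request would be sent with `temperature=0.75`.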

Computer specs:

- AMD Ryzen 7 3700X 8-Core 3.6 GHz

- NVIDIA GeForce RTX 3080 Ti (12 GB VRAM)

- 96 GB DRAM

- WSL 2 (Debian) on Windows 11